- Google Cloud

- Cloud Forums

- Databases

- Re: Datastream to Mysql SQL private IP not working...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm using Terraform to create a DataStream that connects to a private MySQL instance in Cloud SQL. However, I'm encountering connection errors despite seemingly correct configurations.

My Terraform provisions the following resources:

- Google Cloud Networking resources (likely VPC and subnets)

- Cloud SQL Private MySQL instance

- Google DataStream Private Connection

- Compute Engine VM instance

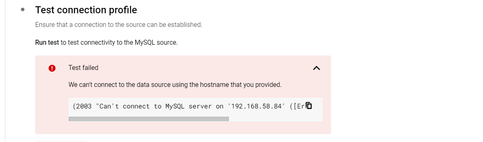

I don't understand why it is not able connect MySQL server:

Solved! Go to Solution.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here are some steps to troubleshoot the connection issues between Datastream and your private Cloud SQL MySQL instance:

1. Fundamentals: Network and Cloud SQL Setup

- VPC Peering: Double-check the peering connection status between your Datastream and Cloud SQL VPCs. Ensure routes are in place for traffic to flow correctly.

- Firewall Rules: Verify that firewall rules allow traffic on port 3306 from Datastream's IP ranges to the Cloud SQL instance.

- Authorized Networks: In your Cloud SQL settings, make sure the Datastream private connection endpoint's IP address is whitelisted. If you're using Cloud SQL Auth Proxy, add the proxy's IP and ensure Datastream points to it.

2. DataStream Configuration

- Connection Profile: Review the hostname (Cloud SQL private IP), port, username, and password. Even small typos here can prevent connections.

- Connectivity Method: Ensure "Private Connectivity" is selected in your connection profile.

3. Hands-on Testing

- Compute Engine VM: Create a VM in the same VPC (or a peered VPC) as Datastream.

- MySQL Client: Install

mysql-clienton the VM and try connecting:mysql -u your_username -p -h [Cloud SQL private IP] - Telnet: Check basic port access:

telnet [Cloud SQL private IP] 3306

4. Deeper Checks

- Datastream Logs: Look for detailed error messages in the Datastream logs.

- IAM Roles: Ensure Datastream and your VM have the correct roles (e.g.,

roles/cloudsql.client).

Additional Tips

- Simplify: If things are complex, try isolating the issue by setting up a minimal test environment. This can help eliminate unrelated configuration problems.

- Plan Ahead: Use

terraform planto get a preview of your infrastructure changes before applying them.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The "connection refused" error from Google Cloud Datastream to your MySQL server (192.168.59.52) likely stems from one of these:

- Incorrect hostname resolution (xxxxxx.com)

- MySQL server not listening on the expected port/interface

- Firewall rules blocking access

- Misconfiguration in your Terraform setup

Troubleshooting Steps:

-

Verify Hostname Resolution:

- From a VM in the same network, run

nslookup xxxxxx.com. The output should match your MySQL server's private IP (192.168.59.52). - If it doesn't, correct your DNS configuration.

- From a VM in the same network, run

-

Check MySQL Server Accessibility:

- Ensure MySQL is listening on the correct interface (all or the specific private IP). Check the

bind-addressin your MySQL config file (my.cnformy.ini). - Verify firewall rules allow inbound traffic on port 3306 (or your custom MySQL port) from Datastream's internal Google services or IP ranges.

- Ensure MySQL is listening on the correct interface (all or the specific private IP). Check the

-

Test Direct Connectivity:

- From a VM in the same network, try

mysql -h xxxxxx.com -u your_username -pto connect directly to the MySQL server using the hostname. - If this fails, there's likely an issue with the server itself (not Datastream).

- From a VM in the same network, try

-

Review Terraform Configuration:

- In your

google_datastream_connection_profileresource, ensure thehostnameundermysql_profiledirectly points to your MySQL server's hostname (not a Compute Engine instance unless it's specifically forwarding MySQL traffic).

- In your

Example:

resource "google_datastream_connection_profile" "source_connection_profile" {

# ... other attributes ...

mysql_profile {

hostname = "your_mysql_server_hostname" # Use the actual MySQL hostname

# ... other attributes ...

}

}Further Debugging:

If the issue persists:

- Check MySQL server and network logs for connection attempts/rejections.

- Contact Google Cloud Support for assistance, especially if you suspect a Datastream service issue.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, you can use the same Cloud SQL Auth Proxy, for multiple Google Cloud Datastream instances.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here are some steps to troubleshoot the connection issues between Datastream and your private Cloud SQL MySQL instance:

1. Fundamentals: Network and Cloud SQL Setup

- VPC Peering: Double-check the peering connection status between your Datastream and Cloud SQL VPCs. Ensure routes are in place for traffic to flow correctly.

- Firewall Rules: Verify that firewall rules allow traffic on port 3306 from Datastream's IP ranges to the Cloud SQL instance.

- Authorized Networks: In your Cloud SQL settings, make sure the Datastream private connection endpoint's IP address is whitelisted. If you're using Cloud SQL Auth Proxy, add the proxy's IP and ensure Datastream points to it.

2. DataStream Configuration

- Connection Profile: Review the hostname (Cloud SQL private IP), port, username, and password. Even small typos here can prevent connections.

- Connectivity Method: Ensure "Private Connectivity" is selected in your connection profile.

3. Hands-on Testing

- Compute Engine VM: Create a VM in the same VPC (or a peered VPC) as Datastream.

- MySQL Client: Install

mysql-clienton the VM and try connecting:mysql -u your_username -p -h [Cloud SQL private IP] - Telnet: Check basic port access:

telnet [Cloud SQL private IP] 3306

4. Deeper Checks

- Datastream Logs: Look for detailed error messages in the Datastream logs.

- IAM Roles: Ensure Datastream and your VM have the correct roles (e.g.,

roles/cloudsql.client).

Additional Tips

- Simplify: If things are complex, try isolating the issue by setting up a minimal test environment. This can help eliminate unrelated configuration problems.

- Plan Ahead: Use

terraform planto get a preview of your infrastructure changes before applying them.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Got it! Thank you.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

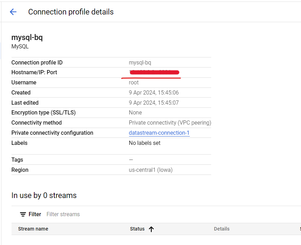

I have created resources using Terraform for DataStream to MySQL private connectivity. Previously, I created a source connection profile and used an IP address instead of a hostname with same configuration. Here is an example:

But now I want to create it using a hostname instead of an IP address. I have written Terraform code for that; however, I'm facing issues while creating it using a hostname.

Could you please help me with this issue?

Error:

Error: Error waiting for Updating ConnectionProfile: {"@type":"type.googleapis.com/google.rpc.ErrorInfo","domain":"datastream.googleapis.com","metadata":{"message":"We can't connect to the data source using the hostname that you provided. Make sure that the hostname is correct.","originalMessage":"(2003, \"Can't connect to MySQL server on '192.168.59.52' ([Errno 111] Connection refused)\")","time":"2024-04-11T12:42:25.686357Z","uuid":"0ff7fc6f-cd38-42f0-b76f-498e0e3bbfa1"},"reason":"WRONG_HOSTNAME"}

│ {"code":"VALIDATE_CONNECTIVITY","description":"Validates that Datastream can connect to the source database.","message":[{"code":"WRONG_HOSTNAME","level":"ERROR","message":"We can't connect to the data source using the hostname that you provided. Make sure that the hostname is correct.","metadata":{"original_error":"(2003, \"Can't connect to MySQL server on '192.168.59.52' ([Errno 111] Connection refused)\")"}}],"state":"FAILED"}

│ with google_datastream_connection_profile.source_connection_profile,

│ on main.tf line 143, in resource "google_datastream_connection_profile" "source_connection_profile":

│ 143: resource "google_datastream_connection_profile" "source_connection_profile" {

Terraform :

resource "google_compute_instance" "custom_hostname_instance" {

name = "custom-hostname-instance-name"

project = var.project

machine_type = var.proxy_machine_type

zone = var.zone

# Set a custom hostname below

hostname = "xxxxxx.com"

boot_disk {

initialize_params {

image = "debian-cloud/debian-11"

}

}

network_interface {

network = google_compute_network.network.name

subnetwork = google_compute_subnetwork.private-1.self_link

access_config {

// Ephemeral public IP

}

}

resource "google_datastream_connection_profile" "source_connection_profile" {

display_name = "Source connection profile"

location = var.region

connection_profile_id = "source-profile"

project = var.project

mysql_profile {

hostname = google_compute_instance.custom_hostname_instance.hostname

username = var.user_name

password = var.user_password

}

private_connectivity {

private_connection = google_datastream_private_connection.private.id

}

# depends_on = [google_datastream_private_connection.private]

}

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The "connection refused" error from Google Cloud Datastream to your MySQL server (192.168.59.52) likely stems from one of these:

- Incorrect hostname resolution (xxxxxx.com)

- MySQL server not listening on the expected port/interface

- Firewall rules blocking access

- Misconfiguration in your Terraform setup

Troubleshooting Steps:

-

Verify Hostname Resolution:

- From a VM in the same network, run

nslookup xxxxxx.com. The output should match your MySQL server's private IP (192.168.59.52). - If it doesn't, correct your DNS configuration.

- From a VM in the same network, run

-

Check MySQL Server Accessibility:

- Ensure MySQL is listening on the correct interface (all or the specific private IP). Check the

bind-addressin your MySQL config file (my.cnformy.ini). - Verify firewall rules allow inbound traffic on port 3306 (or your custom MySQL port) from Datastream's internal Google services or IP ranges.

- Ensure MySQL is listening on the correct interface (all or the specific private IP). Check the

-

Test Direct Connectivity:

- From a VM in the same network, try

mysql -h xxxxxx.com -u your_username -pto connect directly to the MySQL server using the hostname. - If this fails, there's likely an issue with the server itself (not Datastream).

- From a VM in the same network, try

-

Review Terraform Configuration:

- In your

google_datastream_connection_profileresource, ensure thehostnameundermysql_profiledirectly points to your MySQL server's hostname (not a Compute Engine instance unless it's specifically forwarding MySQL traffic).

- In your

Example:

resource "google_datastream_connection_profile" "source_connection_profile" {

# ... other attributes ...

mysql_profile {

hostname = "your_mysql_server_hostname" # Use the actual MySQL hostname

# ... other attributes ...

}

}Further Debugging:

If the issue persists:

- Check MySQL server and network logs for connection attempts/rejections.

- Contact Google Cloud Support for assistance, especially if you suspect a Datastream service issue.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you for the guidance! I have another question: Can we use the same proxy for multiple DataStream's?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, you can use the same Cloud SQL Auth Proxy, for multiple Google Cloud Datastream instances.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

That's Great! Thank you.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have multiple instances can i use same Cloud SQL Auth Proxy for that multiple Google Cloud Datastream instances.

eg: I have 3 MySQL instances and i want to create multiple datastream for each instances for that also can use the same Cloud SQL Auth Proxy?

resource "google_compute_instance" "private" {

project = var.gcp_project_id

name = "datastream-proxy"

machine_type = var.proxy_machine_type

zone = var.gcp_zone_c

boot_disk {

initialize_params {

image = "debian-cloud/debian-11"

}

}

network_interface {

# network = data.google_compute_network.network.name

# subnetwork = data.google_compute_subnetwork.private-1.self_link

network = var.vpc_name

subnetwork = var.subnet_name

network_ip = google_compute_address.static.address

access_config {

// Ephemeral public IP

}

}

metadata_startup_script = <<EOT

#!/bin/sh

apt-get update

sudo apt-get install wget -y

wget https://dl.google.com/cloudsql/cloud_sql_proxy.linux.amd64 -O cloud_sql_proxy

chmod +x cloud_sql_proxy

./cloud_sql_proxy -instances=${data.google_sql_database_instance.main_primary.connection_name}=tcp:0.0.0.0:3306

EOT

service_account {

scopes = ["cloud-platform"]

}

}- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, you can indeed use a single Cloud SQL Auth Proxy to handle connections for multiple Google Cloud Datastream instances, even if these Datastream instances are intended to connect to different MySQL instances hosted on Google Cloud SQL. The Cloud SQL Auth Proxy is designed to manage connections to multiple Cloud SQL instances, making it an efficient solution for scenarios where multiple databases need to be accessible through a centralized, secure channel.

In your Terraform configuration, you can adapt the Cloud SQL Auth Proxy setup to handle connections to multiple Cloud SQL instances by specifying each instance in the startup script of the proxy server. Here’s how you can modify your Terraform script to connect to multiple MySQL instances:

Modify the metadata_startup_script to include multiple Cloud SQL instance connections. You can specify multiple instances in the Cloud SQL Auth Proxy command by separating them with commas. Here's an example of how to modify the script to handle three MySQL instances:

metadata_startup_script = <<EOT

#!/bin/sh

apt-get update

sudo apt-get install wget -y

wget https://dl.google.com/cloudsql/cloud_sql_proxy.linux.amd64 -O cloud_sql_proxy

chmod +x cloud_sql_proxy

# Assuming three Cloud SQL instances

./cloud_sql_proxy -instances=${data.google_sql_database_instance.instance1.connection_name}=tcp:3306,${data.google_sql_database_instance.instance2.connection_name}=tcp:3307,${data.google_sql_database_instance.instance3.connection_name}=tcp:3308

EOTIn this script:

- Each Cloud SQL instance is assigned a different local port (3306, 3307, 3308). This configuration allows Datastream (or any other client) to connect to different databases by targeting different ports on the same proxy server.

- Ensure that the database instance data sources (

instance1,instance2,instance3) are defined correctly in your Terraform configurations to pull the connection names.

Adjust Network Settings:

- Make sure the VM has proper network settings to communicate within your VPC and can reach the Google Cloud SQL instances. The configurations for network and subnetwork should correctly link to your VPC and subnet.

- The

network_ipshould be set if you are assigning a static internal IP address, ensuring it is accessible from where the Datastream instances will be running.

Service Account Permissions:

- The service account attached to the Compute Engine instance running the Cloud SQL Auth Proxy should have appropriate permissions (roles/cloudsql.client or broader as necessary) to connect to all specified Cloud SQL instances.

Security Considerations:

- Even though you can use public ports (3306, 3307, 3308) as in the example, ensure that only authorized services within your network can access these ports. Use VPC firewall rules to restrict access as needed.

Testing and Validation:

- After setting up, test the connectivity from a client within the same network to ensure that it can connect to each MySQL instance via the respective ports specified in the proxy configuration.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have configured multiple instances in Datastream and created multiple DataStream's for each one.

In my case, I already have multiple datasets and tables in BigQuery.

Now, I want to replicate newly added data into BigQuery in the same dataset, but I am not able to replicate it. I am facing the following issues:

Is datastream not able to replicate data in same dataset in same table or it is creating new dataset and table for each?

resource "google_datastream_connection_profile" "source_connection_profile1" {

display_name = "mysql-bq-source1"

project = var.gcp_project_id

location = var.gcp_region

connection_profile_id = "source-profile1"

mysql_profile {

hostname = google_compute_instance.private.network_interface.0.network_ip

username = data.google_secret_manager_secret_version.app_user_secret.secret_data

password = data.google_secret_manager_secret_version.app_pwd_secret.secret_data

}

private_connectivity {

private_connection = google_datastream_private_connection.private.id

}

depends_on = [

google_compute_address.static,

data.google_secret_manager_secret_version.app_pwd_secret,

data.google_secret_manager_secret_version.app_user_secret

]

}

resource "google_datastream_connection_profile" "destination_connection_profile1" {

display_name = "mysql-bq-destination1"

location = var.gcp_region

connection_profile_id = "destination-profile1"

project = var.gcp_project_id

bigquery_profile {}

}

# Create Datastream Stream

resource "google_datastream_stream" "mysql_to_bigquery1" {

display_name = "mysql_to_bigquery1"

location = var.gcp_region

project = var.gcp_project_id

stream_id = "mysql_to_bigquery1"

desired_state = "NOT_STARTED"

source_config {

source_connection_profile = google_datastream_connection_profile.source_connection_profile1.id

mysql_source_config {

include_objects {

mysql_databases {

database = "ss_v2_db"

mysql_tables {

table = "transaction_monitors"

}

}

}

}

}

destination_config {

destination_connection_profile = google_datastream_connection_profile.destination_connection_profile1.id

bigquery_destination_config {

source_hierarchy_datasets {

dataset_template {

location = var.gcp_region

# kms_key_name = "bigquery-kms-name"

}

}

}

}

backfill_none {

}

}

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The error "BIGQUERY_DYNAMIC_DATASET_LOCATION_CONFLICT", "level": "ERROR", "message": "Datastream can't create one or more BigQuery datasets in the specified location, because datasets with the same name already exist in a different location.", "metadata": {"dataset_conflicts":"ss_v2_db (US) "}}],"state": "FAILED" }" is related to a location conflict for BigQuery datasets. According to the error message, it appears that Datastream is attempting to create or write to a dataset in a location that conflicts with existing datasets.

- Datastream can't create one or more BigQuery datasets in the specified location, because datasets with the same name already exist in a different location.

- The BigQuery dataset you are trying to write to via Datastream is set up in a different geographic location than your Datastream destination configuration specifies.

Here’s how to troubleshoot and potentially resolve this issue:

-

Check Dataset Location:

- Ensure that the BigQuery dataset you want Datastream to write to is in the same region as specified in your Datastream configuration. You can check the dataset's location in the BigQuery UI or using the

bqcommand-line tool:bq show --format=prettyjson your-dataset - Look for the "location" field in the output.

- Ensure that the BigQuery dataset you want Datastream to write to is in the same region as specified in your Datastream configuration. You can check the dataset's location in the BigQuery UI or using the

-

Update Datastream Configuration:

- If the locations mismatch, you have two options:

- Change the Datastream Configuration: Modify the

locationin yourdataset_templateto match the existing BigQuery dataset's location. Here is the modified section of your Terraform script:bigquery_destination_config { source_hierarchy_datasets { dataset_template { location = "YOUR_DATASET_LOCATION" # Match this with your BigQuery dataset location } } } - Migrate the BigQuery Dataset: If feasible, you might consider moving your BigQuery dataset to the desired location. However, this can be complex and is not commonly recommended due to the potential for data transfer costs and complexities.

- Change the Datastream Configuration: Modify the

- If the locations mismatch, you have two options:

-

Reapply Terraform Configuration:

- After making necessary changes to your Terraform configuration, apply the changes:

terraform apply - Monitor the output for any errors to confirm that the issue has been resolved.

- After making necessary changes to your Terraform configuration, apply the changes:

-

Validate and Monitor:

- Once Terraform successfully applies your configuration, monitor the Datastream and BigQuery to ensure data replication starts and operates as expected.

- Check for any delays or issues in the replication process, and verify data integrity in the destination tables.

-

Logging and Debugging:

- If problems persist, check the Google Cloud Console under Datastream and BigQuery sections for logs and error messages. These can provide more detailed insights into what might be going wrong.

- If problems persist, check the Google Cloud Console under Datastream and BigQuery sections for logs and error messages. These can provide more detailed insights into what might be going wrong.

If you continue to encounter difficulties, review the Google Cloud Datastream documentation for any updates or notices regarding limitations or configuration specifics.

-

-

- As a last resort, contacting Google Cloud support can help, especially if the configurations and setup seem correct but errors persist.

-

Twitter

Twitter