- Looker

- Looker Forums

- Modeling

- [Analytics Block] LLMs in LookML

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Do you want to enable your Looker users to leverage large language models on your text data?

This analytic block walks you through how to leverage the ML.GENERATE_TEXT function in LookML to perform generative natural language tasks on text data stored in BigQuery. Example language tasks include the following:

- Classification

- Sentiment Analysis

- Entity extraction

- Extractive Question Answering

- Summarization

- Rewriting text in a different style

- Ad copy generation

- Concept ideation

The full block can be found here, and the following example is built on the CFPB Consumer Complaint Database public dataset.

Implementation Steps

- Follow the instructions here to create a remote function connection, grant the service account access, and create the remote model. Ensure that the BigQuery service account that Looker is using has access to the model

- Copy the views and model in the consumer_complaint_database folder and the project manifest in this repo to your Looker instance

- Update the big_query_model_name constant in the manifest file to your model name created in step 1

- Update the connection name in the model file to your BigQuery connection

Project Files

manifest.lkml [link]

constant: big_query_model_name {

value: "YOUR_PROJECT_NAME.DATASET_NAME.MODEL_NAME"

}

constant: generate_text_table_name {

value: "bigquery-public-data.cfpb_complaints.complaint_database"

}

constant: generate_text_primary_key {

value: "complaint_id"

}

constant: generate_text_text_field {

value: "consumer_complaint_narrative"

}

The manifest file contains the constants used in the LookML project. Replace the big_query_model_name with the model you have created in step 1.

generate_text_dt.view.lkml [link]

view: generate_text {

derived_table: {

sql:

SELECT

@{generate_text_primary_key}

, prompt

, ml_generate_text_result

FROM ML.GENERATE_TEXT(

MODEL `@{big_query_model_name}`, (

SELECT

@{generate_text_primary_key}

, CONCAT({% parameter prompt_input %},': """', @{generate_text_text_field}, '"""') AS prompt

FROM @{generate_text_table_name} AS model_query

WHERE TRUE

{% if complaint_database.company_name._is_filtered %} AND {% condition complaint_database.company_name %} model_query.company_name {% endcondition %} {% endif %}

...

),

STRUCT(

{% if max_output_tokens._parameter_value > 1024 or max_output_tokens._parameter_value < 1 %} 50 {% else %} {% parameter max_output_tokens %} {% endif %} AS max_output_tokens

, {% if temperature._parameter_value > 1 or temperature._parameter_value < 0 %} 1.0 {% else %} {% parameter temperature %} {% endif %} AS temperature

, {% if top_k._parameter_value > 40 or top_k._parameter_value < 1 %} 40 {% else %} {% parameter top_k %} {% endif %} AS top_k

, {% if top_p._parameter_value > 1 or top_p._parameter_value < 0 %} 1.0 {% else %} {% parameter top_p %} {% endif %} AS top_p

)

)

;;

}

This derived table shows you the SQL pattern to leverage the ML.GENERATE_TEXT function in LookML and take user input as parameters for the text prompt and function arguments.

The liquid _is_filtered conditions are used to mirror any filters on the base table to ensure that the Vertex AI APIs are only run on the final result set for performance reasons.

There are five parameters defined on this view:

prompt_input - This is where users can ask their question. The text will be prepended to the text field that is being analyzed by the model. Prompt design can strongly affect the responses returned by the model. For more information, see Overview of text prompt design.

max_output_tokens - This sets the maximum number of tokens that the model outputs. Specify a lower value for shorter responses and a higher value for longer responses.

temperature - This is used for sampling during the response generation, which occurs when top_k and top_p are applied. It controls the degree of randomness in token selection. Lower temperature values are good for prompts that require a more deterministic and less open-ended or creative response, while higher temperature values can lead to more diverse or creative results.

top_k - This changes how the model selects tokens for output. Specify a lower value for less random responses and a higher value for more random responses.

top_p - This changes how the model selects tokens for output. Specify a lower value for less random responses and a higher value for more random responses.

Review the arguments section for a full description of each parameter.

For the model results, the ML.GENERATE_TEXT function outputs a column labeled ml_generate_text_result in the following JSON format:

{

"predictions": [

{

"citationMetadata": { "citations": [] },

"content": "Lorem ipsum dolor sit amet, consectetur adipiscing elit, sed do eiusmod tempor incididunt...",

"safetyAttributes": { "blocked": false, "categories": [], "scores": [] }

}

]

}

The content key contains the text result of the function, and we can extract that value in LookML using the JSON_VALUE function:

dimension: text_result {

type: string

sql: JSON_VALUE(${TABLE}.ml_generate_text_result, '$.predictions[0].content') ;;

html:<div style="white-space:pre">{{value}}</div>;;

}

consumer_complaint_database.model.lkml [link]

connection: "YOUR_BQ_CONNECTION_NAME"

include: "./*.view.lkml"

datagroup: consumer_complaint_database_default_datagroup {

max_cache_age: "1 hour"

}

persist_with: consumer_complaint_database_default_datagroup

explore: complaint_database {

always_filter: {

filters: [

generate_text.prompt_input: "",

generate_text.max_output_tokens: "",

generate_text.temperature: "",

generate_text.top_k: "",

generate_text.top_p: ""

]

}

join: generate_text {

type: left_outer

relationship: one_to_one

sql_on: complaint_database.@{generate_text_primary_key} = generate_text.@{generate_text_primary_key} ;;

}

}

This is where we join our derived table to the base table. Replace the connection with the name for your BigQuery connection set up in Looker.

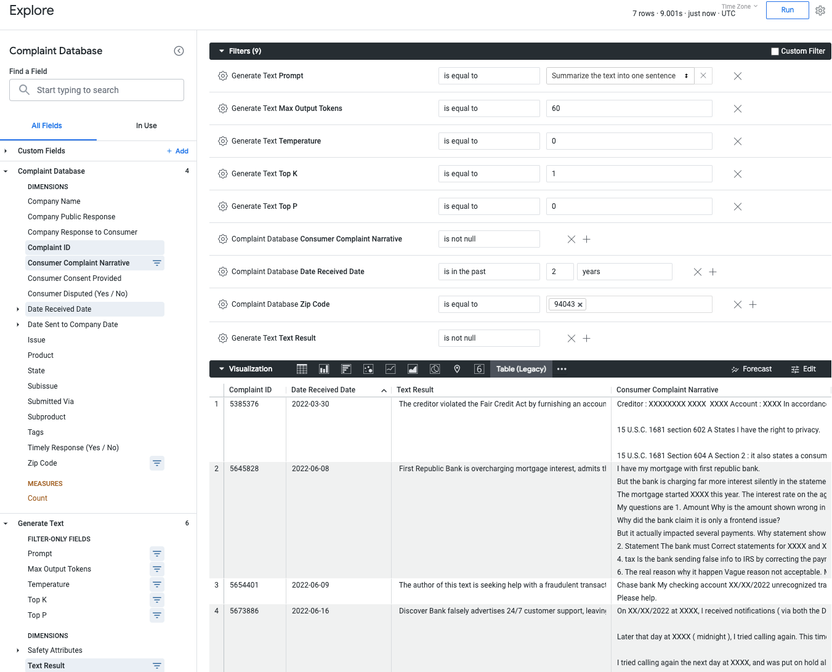

Running the Model

Now that we have the LookML files ready, we can query the dataset directly from the Looker Explore 🎉

Note that there is a quota of 60 requests per minute for the ML.GENERATE_TEXT function (i.e. ~1 minute per 60 rows), so keep result sets small in order to have performant queries.

Modifying this block for your own data

You can easily adapt this block to your own data by:

- Update the constants to point to a different table, primary key, and text field

- Update the Explore to use a different view as the base table

- Labels:

-

Analytics Block

-

sql

-

access grant

6 -

actionhub

1 -

Actions

8 -

Admin

7 -

Analytics Block

27 -

API

25 -

Authentication

2 -

bestpractice

7 -

BigQuery

69 -

blocks

11 -

Bug

60 -

cache

7 -

case

12 -

Certification

2 -

chart

1 -

cohort

5 -

connection

14 -

connection database

4 -

content access

2 -

content-validator

5 -

count

5 -

custom dimension

5 -

custom field

11 -

custom measure

13 -

customdimension

8 -

Customizing LookML

119 -

Dashboards

144 -

Data

7 -

Data Sources

3 -

data tab

1 -

Database

13 -

datagroup

5 -

date-formatting

12 -

dates

16 -

derivedtable

51 -

develop

4 -

development

7 -

dialect

2 -

dimension

46 -

done

9 -

download

5 -

downloading

1 -

drilling

28 -

dynamic

17 -

embed

5 -

Errors

16 -

etl

2 -

explore

58 -

Explores

5 -

extends

17 -

Extensions

9 -

feature-requests

6 -

filter

220 -

formatting

13 -

git

19 -

googlesheets

2 -

graph

1 -

group by

7 -

Hiring

2 -

html

19 -

ide

1 -

imported project

8 -

Integrations

1 -

internal db

2 -

javascript

2 -

join

16 -

json

7 -

label

6 -

link

17 -

links

8 -

liquid

154 -

Looker Studio Pro

1 -

looker_sdk

1 -

LookerStudio

3 -

lookml

858 -

lookml dashboard

20 -

LookML Foundations

54 -

looks

33 -

manage projects

1 -

map

14 -

map_layer

6 -

Marketplace

2 -

measure

22 -

merge

7 -

model

7 -

modeling

26 -

multiple select

2 -

mysql

3 -

nativederivedtable

9 -

ndt

6 -

Optimizing Performance

30 -

parameter

70 -

pdt

35 -

performance

11 -

periodoverperiod

16 -

persistence

2 -

pivot

3 -

postgresql

2 -

Projects

7 -

python

2 -

Query

3 -

quickstart

5 -

ReactJS

1 -

redshift

10 -

release

18 -

rendering

3 -

Reporting

2 -

schedule

5 -

schedule delivery

1 -

sdk

5 -

singlevalue

1 -

snowflake

16 -

sql

222 -

system activity

3 -

table chart

1 -

tablecalcs

53 -

tests

7 -

time

8 -

time zone

4 -

totals

7 -

user access management

3 -

user-attributes

9 -

value_format

5 -

view

24 -

Views

5 -

visualizations

166 -

watch

1 -

webhook

1 -

日本語

3

- « Previous

- Next »

Twitter

Twitter