Chirp Model with Multi Channel Recordings Seems Br...

I am investigating the benefits of upgrading from the V1 Phone Call "Enhanced" model to Chirp Telephony to get more accurate transcriptions of phone calls. I tested both models in the Google Cloud console UI and was quite surprised to find that the Chirp model appears to be broken.

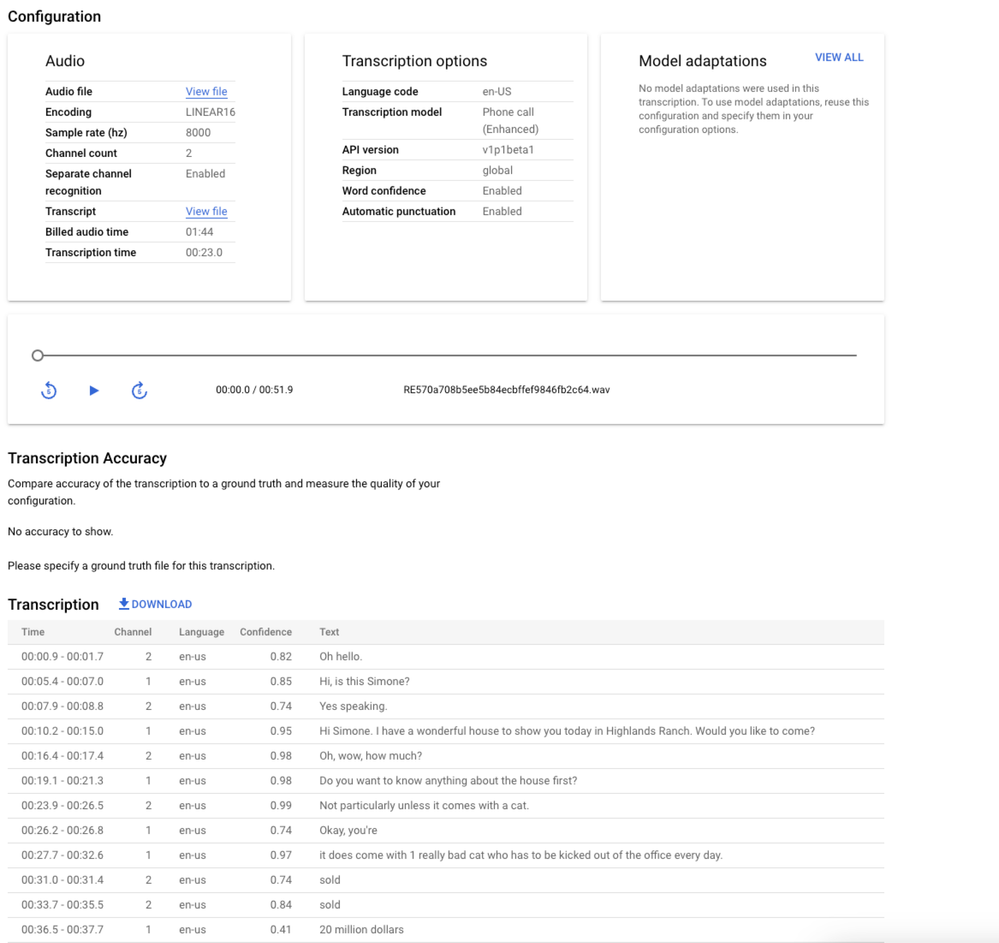

I tested with a 52-second call recording that has two channels.

The V1 model results look fine: they are split by channel and timestamp as expected, and I can enable punctuation as well.

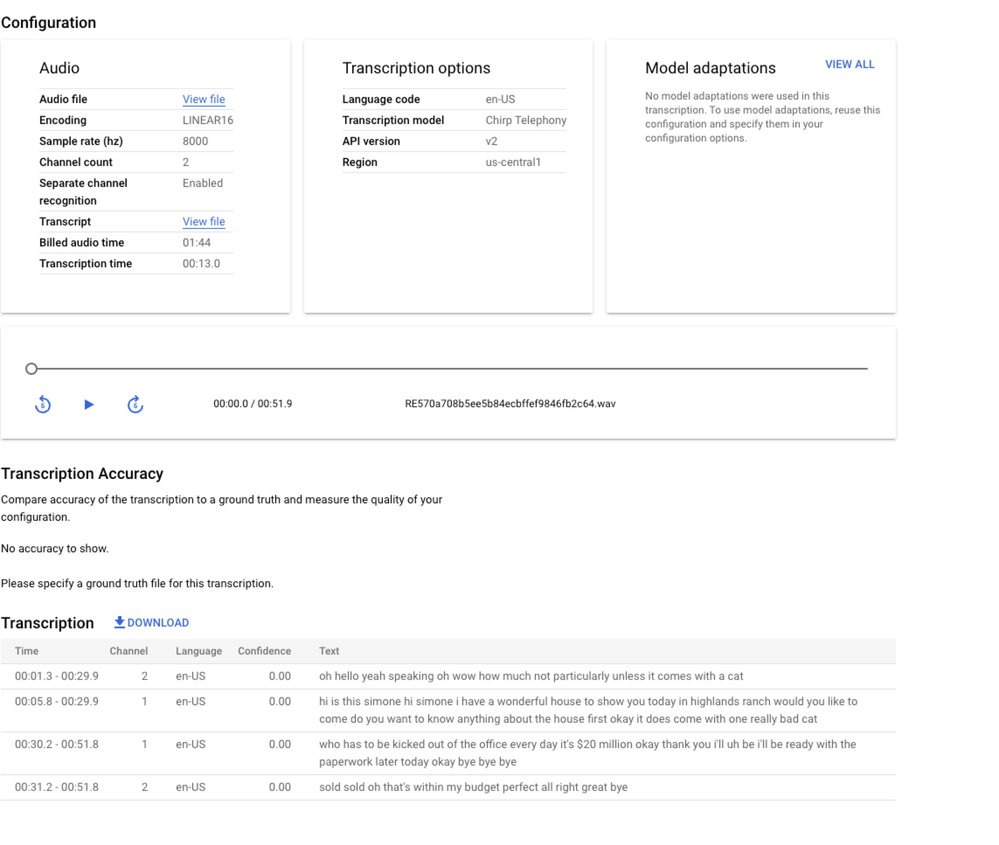
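To reproduce the V1 setup outside the console, the configuration described above can be written as a JSON body for the REST `speech:recognize` method. This is a minimal sketch: the encoding, sample rate, and language code are assumptions about the recording, and `build_v1_phone_call_request` is a hypothetical helper name. Synchronous `recognize` accepts roughly one minute of audio, which covers a 52-second call.

```python
import base64


def build_v1_phone_call_request(audio_bytes: bytes, channel_count: int = 2) -> dict:
    """Build a JSON body for the V1 REST method speech:recognize.

    Hypothetical helper. Encoding, sample rate, and language are assumptions;
    adjust them to match the actual recording.
    """
    return {
        "config": {
            "encoding": "LINEAR16",        # assumption: uncompressed PCM audio
            "sampleRateHertz": 8000,       # assumption: narrowband telephony audio
            "languageCode": "en-US",       # assumption: English speech
            "model": "phone_call",
            "useEnhanced": True,           # request the "Enhanced" variant
            "audioChannelCount": channel_count,
            # Recognize each channel separately; results carry a channelTag.
            "enableSeparateRecognitionPerChannel": True,
            "enableAutomaticPunctuation": True,
        },
        # Inline audio must be base64-encoded inside the JSON body.
        "audio": {"content": base64.b64encode(audio_bytes).decode("ascii")},
    }
```

The body would be POSTed to `https://speech.googleapis.com/v1/speech:recognize` with an OAuth access token. For WAV or FLAC files the header already describes the encoding and sample rate, so those two fields can usually be omitted.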

Here is the output of the Chirp Telephony model for the same recording. It is noticeably worse: beyond the lack of punctuation, the model does not appear to split the transcript by channel at all.
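One way to check the channel split objectively, rather than by eye in the console, is to group the returned results by `channelTag`. If every result lands under a single tag (or carries none), per-channel recognition did not take effect. This sketch assumes the JSON response shape of the REST `recognize` methods, where each result has `alternatives` and, when channels are recognized separately, a `channelTag`; V2 results carry a `channelTag` field as well. The function name and the use of `0` for untagged results are illustrative choices.

```python
from collections import defaultdict


def transcripts_by_channel(response: dict) -> dict[int, list[str]]:
    """Group top-alternative transcripts from a recognize response by channel.

    Results without a channelTag are collected under 0, an arbitrary
    placeholder meaning "no channel information returned".
    """
    grouped: dict[int, list[str]] = defaultdict(list)
    for result in response.get("results", []):
        alternatives = result.get("alternatives", [])
        if not alternatives:
            continue  # skip results that came back with no hypotheses
        channel = result.get("channelTag", 0)
        grouped[channel].append(alternatives[0].get("transcript", ""))
    return dict(grouped)
```

Running this on the V1 and Chirp responses for the same file gives a like-for-like comparison: a two-channel recording processed per channel should produce two keys.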

This is so bad that I have to wonder: am I doing something wrong? Or am I misunderstanding the purpose of Chirp as a drop-in replacement for the V1 Speech models?

- Labels: Speech-to-Text


Hello,

According to Google's public documentation:

“Chirp processes speech in much larger chunks than other models do. This means it might not be suitable for true, real-time use.”

Currently, many Speech-to-Text features are not supported by the Chirp model. The specific restrictions are:

- Confidence scores: The API returns a value, but it isn't truly a confidence score.

- Speech adaptation: No adaptation features supported.

- Diarization: Automatic diarization isn't supported.

- Forced normalization: Not supported.

- Word level confidence: Not supported.

- Language detection: Not supported.
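Before switching an existing V1 configuration to Chirp, it can help to flag settings that depend on the features listed above as unsupported. The following is a hypothetical pre-flight check: the field names are V1 REST `RecognitionConfig` fields, but the mapping from fields to restrictions is an illustration, not an official compatibility table, and it does not cover every restriction (for example, forced normalization is left out).

```python
# Hypothetical mapping from V1 RecognitionConfig JSON fields to the Chirp
# restrictions listed above. Illustrative only; verify against current docs.
UNSUPPORTED_ON_CHIRP = {
    "speechContexts": "speech adaptation",
    "adaptation": "speech adaptation",
    "diarizationConfig": "automatic diarization",
    "enableWordConfidence": "word-level confidence",
    "alternativeLanguageCodes": "language detection",
}


def chirp_migration_warnings(v1_config: dict) -> list[str]:
    """Return one warning per V1 setting that relies on a feature Chirp lacks."""
    warnings = []
    for field, feature in UNSUPPORTED_ON_CHIRP.items():
        value = v1_config.get(field)
        if field == "diarizationConfig":
            # A diarizationConfig block only matters if diarization is enabled.
            in_use = bool(value and value.get("enableSpeakerDiarization"))
        else:
            # Empty lists/dicts and False are treated as "not in use".
            in_use = bool(value)
        if in_use:
            warnings.append(f"{field}: {feature} is not supported by Chirp")
    return warnings
```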

Chirp does support the following features:

- Automatic punctuation: The punctuation is predicted by the model. It can be disabled.

- Word timings: Optionally returned.

- Language-agnostic audio transcription: The model automatically infers the spoken language in your audio file and adds it to the results.
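Chirp is served through the V2 API, so the request has a different shape from V1: features move under `config.features`, and the call goes to a recognizer path on a regional endpoint. This sketch uses the implicit recognizer `_` and turns on the two supported features named above. The project ID, the `us-central1` default, and the language code are placeholders, and `build_v2_chirp_request` is a hypothetical helper. The `multiChannelMode` line is included only to make the comparison with V1 explicit; whether Chirp honors it is exactly the open question in this thread.

```python
import base64


def build_v2_chirp_request(audio_bytes: bytes, project_id: str,
                           location: str = "us-central1") -> tuple[str, dict]:
    """Return (url, json_body) for a V2 recognize call using chirp_telephony.

    Hypothetical helper. Chirp is offered only in specific regions; the
    default location here is an assumption to check against the docs.
    """
    url = (
        f"https://{location}-speech.googleapis.com/v2/projects/{project_id}"
        f"/locations/{location}/recognizers/_:recognize"
    )
    body = {
        "config": {
            "autoDecodingConfig": {},      # let the API read the file header
            "model": "chirp_telephony",
            "languageCodes": ["en-US"],    # assumption: English speech
            "features": {
                "enableAutomaticPunctuation": True,  # supported, can be disabled
                "enableWordTimeOffsets": True,       # optional word timings
                # Assumption: Chirp support for per-channel recognition is
                # unconfirmed; keep or drop this after checking the docs.
                "multiChannelMode": "SEPARATE_RECOGNITION_PER_CHANNEL",
            },
        },
        # Inline audio, base64-encoded, as in V1.
        "content": base64.b64encode(audio_bytes).decode("ascii"),
    }
    return url, body
```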

By contrast, STT V1 transcription models such as phone_call are trained specifically to recognize speech recorded over the phone, so they can produce more accurate transcriptions for that kind of audio.

Hope this helps.
